In the spirit of a Let’s Play, I’m trying out a format where I read through an academic paper and interpret it as I go, like a discussion seminar of one. Too often I try to read things like this without making sure I understand, and understanding is measured by the ability to explain. I hope this can make the world of research papers more accessible to undergraduadtes, precocious high schoolers, and people like myself who want to deepen their education. I expect readers to follow along in the paper and look to my narration as an aid to decompress the academic phrasing and provide missing background.

Today’s paper is a foundational work in graphics from 1993, simply titled Mesh Optimization. The abstract:

We present a method for solving the following problem: Given a set

of data points scattered in three dimensions and an initial triangular

mesh M0, produce a mesh M, of the same topological type as M0 , that

fits the data well and has a small number of vertices. Our approach is

to minimize an energy function that explicitly models the competing

desires of conciseness of representation and fidelity to the data. We

show that mesh optimization can be effectively used in at least two

applications: surface reconstruction from unorganized points, and

mesh simplification (the reduction of the number of vertices in an

initially dense mesh of triangles).

If you’re unfamiliar with an “energy function,” I believe it comes from the idea in physics that systems tend to “prefer” low energy states. You can imagine the extra energy involved in exciting electrons to higher orbitals, and a model that minimizes the energy function by predicting the electron at its lowest available orbital. In operations research, it is usually called an objective function, or a cost function. In machine learning models, the objective function is some measure of the accuracy of predictions, balanced against a measure of “over-fitting,” and we see that the authors here use a similar pattern for their energy function. There will be some multiplier for the “conciseness” part or the “fidelity” part that the user of the model specifies for their desired tradeoff.

Introduction

They state the problem. Given a collection of data points in 3D space, and an initial mesh of triangles “near the data, find a mesh M of the

same topological type as M0 that fits the data well and has a small

number of vertices.” Fitting the data well means we need to measure the distance of each data point to the nearest triangle in our new mesh. I never studied much topology, outside of a few YouTube videos, but in this context I belive “the same topological type” just means the meshes have the same number of holes.

Their first example is figure 7(A,b,C), all the way at the end of the paper, and it illustrates the point well. The outer shell of the object is just a cylinder going through a cube. You could save tons of vertices by just putting a cube of 8 vertices there, but you’d be discarding important qualitative aspects of the object.

They go on to detail the energy function mentioned in the abstract, giving the tradeoff parameter I alluded to as c_rep for compact representation. Then, “We use the input mesh M0 as a starting point for a non-linear optimization process.” This is its own whole field of study, with its own software libraries.

An interlude on prior art gives some context to the problem, and I’m here for the second one, mesh simplification. Another example shows them reducing a mesh size by more than a factor of 20, which is pretty impressive to me. As a final point, they say “It demonstrates how the algorithm’s ability to recover sharp edges and corners can be exploited to automatically segment the final mesh into smooth connected components,” which I did not expect! Ready to learn more!

Mesh Representation

This section scared me at first, because I thought I would have to go learn more about topology and come back. it’s really not that far out, although some linear algebra will be indispensable here.

Formally, a mesh M is a pair (K ; V), where: K is a simplicial complex representing the connectivity of the vertices, edges, and faces, thus determining the topological type of the mesh;

What’s a simplical complex, something ducks walk on? Well, we can work it out from the context. “The 0-simplices i in K are called vertices, the 1-simplices {i; j} in K are called edges, and the 2-simplices {i; j; k;} in K are called faces.” So a simplex must be a group of related elements, and an N-simplex has N relations between those elements. Either that, or they’re N-dimensional confined objects. I’m sure There’s a more general topological meaning, but we are blessed to be working squarely in the familiar, linear 3D space.

Now we’re going from a “topological realization” to a “geometric realization.” The “standard basis vectors {e0, …, em} in Rm” are of course just vectors that represent each axis of the space, so in R3 they’re (1,0,0), (0,1,0), and (0,0,1). these are sometimes also called i, j, and k, but those letters are already taken in the definition of the “simplicial complexes.”

In programming, a mesh is represented as a list of vertices, which are points in space, and a list of indices of those vertices, which are taken three at a time to represent each triangle in the mesh. They Describe this as a linear mapping from RM to R3, where M is the number of vertices, which feels like an unnecessarily abstract way to say it. It seems like they’re setting this up to differentiate the nice meshes we’re used to seeing, from meshes where the triangles cross through each other, or where two vertices share the same position. I won’t really dwell on it, because the “topological realization” doesn’t come up in the rest of the paper. only the “topological type” is discussed.

The barycentric coordinate vector thing feels like it comes out of nowhere, but luckily I’m familiar with the convex part. A convex combination is a linear combination where the coefficients sum to 1. The simplest form is found in linear interpolation between two points, where you traverse a formula (cA + (1 – c)b) by sliding the coefficient c between 0 and 1. It should be easy to see how this confines the formula to a line segment between the two points, or a “1-simplex.” Similarly, a convex combination of the 3 points in figure 2 will produce points confined to the triangle there.

This, I think, elucidates why they went to the trouble. A general mesh in 3D space like the one in figure 7(c) won’t be convex, but they found a way to represent a mesh in a convex way. When the problem space is convex, the optimization techniques become rather convenient, especially with libraries like CVX in Matlab. It also makes sense that, because we’re replacing or deleting vertices, we need a way to elegantly track how that affects the rest of the mesh without poking new holes into it. The exact mechanism should be clarified once we get to section 4.

Definition of the Energy Function

The energy function gets 3 key terms.

“Sum of squared distances” is a common theme in fitting techniques. The paper mentioned it earlier with the shorthand “least squares.” I believe it’s the squared distance because it elides the square root operation of a traditional distance function, which can be expensive for computers. Even GPUs only have a reciprocal square root operation, which is good for normalizing vectors.

The representation energy is just the calibration coefficient described earlier, times the number of vertices. Refreshingly simple!

What is this “spring” energy? The paper motivates the idea by showing us a reult optimized on just the first two terms, with random spikes, saying, “These spikes are a manifestation of the fundamental problem that a minimum of Edist + Erep may not exist.” The idea seems to be that we simulate tension on the length of edges between the vertices, not unlike the electron excitement example I mentioned earlier. To my surprise, they suggest that the “spring constant” can be reduced as the algorithm progresses, so it’s not just another flat calibration tool.

After a brief note about scaling the mesh to fit in the unit cube, it’s on to the meat of the paper, the algorithm itself.

Minimization of the energy function

The intro of section 4 is a bit too vague for me, but it sets up the main sub-optimizations. One problem is finding the best vertex positions, subject to the constraints of the abstract relationships in the “topological realization” described earlier, and the other is coming up with a “move” to add, remove, or replace edges, producing a new toplogy to give to the first problem.

Optimize Vertex Positions

To find the distance of each point from the mesh, we first have to find its nearest point on the mesh. Every time you see the word “barycentric,” just remember that we’re expressing a point on a face in terms of the face’s vertices, and that allows us to see how it changes as we change the locations of the vertices.

The two-part strategy starts with “projecting” each point of the set we’re fitting to onto the current mesh, by finding its nearest mesh-point as a vertex-combination, or barycentric coordinate vector. Then, holding those vectors for the squared distance function on each point, we express the choice of vertices as a linear optimization problem. Let’s see how they work in detail.

Projection

You could just try each point’s distance against every face, but the complexity of that blows up quickly. The paper describes a “spatial partitioning” method, which is also used for tasks like collision detection. When we divide the points and faces among cells in space, there is a much smaller set of candidate faces to check for each point. But they take it one clever step further, assuming that, in future steps, the point will be closest to the same face, or one that is adjacent to its previous closest face, shrinking the set of candidates even further.

The math of projection is not described, but it extends from the task of projecting a point onto a plane. If the point’s projection does not lie within the triangle, the result will be on an edge or vertex. Once we have our projections in barycentric terms, we are ready to adjust vertices.

Linear Least Squares

Interestingly, they perform the optimization independently on each spatial axis. A column vector comprising the x (or y or z) coordinate of each vertex (the variables we are optimizing) is multiplied with a matrix. The “distance” and “spring” functions are joined into this sparse “design matrix” by concatenating two sets of rows. the first n (number of fit points) rows are the barycentric vectors found in the projection stage. The remaining e (number of current mesh edges) rows are the spring constant as distributed into the squared distance function, at the indices that match the vertex pairs for each edge. Sorry if that was too dense, but it’s just meant as a companion to dust off the fancy description in the paper.

For the sake of formal linear algebra, they construct the vector subtracted for squared distance, as the x (or y or z) coordinate of each fit point, followed by zeros for all the edges because the fit points aren’t involved in the spring function. Now that we have the problem in a form that works with standard methods, they use the “conjugate gradient” method. That method’s details are out of scope today, best left as the subject of othher papers, or a textbook, but I’ll briefly go into the role and spirit of it.

You’ll see “gradient descent” (synonymous with “hill climbing”) in most optimization literature, and in linear regression models in statistics. Following the direction of the gradient brings us toward a local maximum or minimum, where the derivative is zero. Some methods have a parameter that allows for overshoot, which lets the process keep looking for another local optimum that might be more valuable. In our case the objective function is quadratic, so there’s only one optimum to work toward. When it’s just a scalar quadratic function, the optimum can be found in one algebraic step. In the multivariate case, the same can sometimes be done with linear algebra, but it requires operations with O(N^2) or O(n^3) complexity.

The paper mentions QR decomposition as one such method for an exact answer, but gradient descent can be faster when exactness is unimportant or in tension with other criteria. they show that a fixed number of iterations (~200) of sparse matrix multiplication gets as close as we need, yielding a linear time solution. Woo-hoo!

Optimize mesh

Now we target the vertex-count portion of the overall energy function. The diagrams for the three “legal moves” and the definitions of boundary edges and vertices seem extra clear. The conditions for an edge collapse to be legal are a bit harder to put together.

At first glance, The first condition seems to just mean that the edge is only a part of triangles, but that’s given by the 2-simplex definition. How is it possible for a vertex k to form edges with both vertices on an edge {i, j}, and not also form a face {i, j, k} with them? On further thought, I realize that it may still look like a triangle on paper, but that doesn’t mean it’s registered as a face in the mesh. There’s no rule against the starting mesh being a sheet with a little hole in it, and that topological trait has to be preserved. It’s plain to see that collapsing the edge {i, j} would close the hole.

I can more easily see how to violate the second condition. You can picture The boundary vertices i and j as the points where a triangular gable {i, j, k} sits atop a square wall of a house. The edge {i, j} marks a line between the living space and the attic, perhaps. If you collapse that edge, the edges {i, k} and {j, k} merge into one and the house becomes an a-frame with a hanging line protruding from its tip that isn’t part of a 2-simplex.

The third condition is just a cleanup of minimal cases, for the sake of completeness. All of the meshes I care about optimizing have more than 4 vertices. The 3 vertex case is already optimal if the algorithm ever gets there, and I challenge you to construct a mesh with exactly 4 vertices, of which 2 are not on a boundary edge. I don’t think it’s possible.

Exploiting locality

Now they say “The idealized algorithm described so far is too inefficient to be of practical use.” we wouldn’t want to just try out any old legal move and then re-optimize the vertices in a random walk. we could be lookiing at time costs on the order of O(N!).

Evaluating the Effect of Legal Moves

The first time save is to only update a handful of vertices that are affected by a move, because for example collapsing an edge on one ear of a rabbit won’t change any considerations about the other ear.

Definition of a neighborhood

I think Figure 5 does a better job of explaining the definition here, but the text helps solidify the concept. It’s just weird that they switch the role of s and s' in the middle of it. The neighborood is more simply explained as “any face that the entity is a part of.”

Evaluation of Edge Collapse

As promised at the beginning of 4.3.1, we get some idea of the benefit of the collapse by optimizing the position of the vertex that the edge collapsed into, and because other vertices are not optimized, this gives us a lower bound for the true benefit. I was hoping for a closed-form optimum for that vertex position, but we’re not actually performing the vertex optimization step. we’re just ballparking the value of doing the whole step after this edge move.

I don’t quite follow the “dihedral angle of edges” heuristic for checking that the local mesh does not self-intersect, especially because they don’t give a specific threshold. I don’t see it in the parameter table or later discussion, either. Dihedral angles are a new concept for me.

The edge split evaluation is self-explanatory, just what we’ve seen above, but with less to consider.

Evaluation of Edge Swap

The edge swap evaluation follows a similar pattern as before for both vertices on the new edge {k, l}, although they don’t mention what new positions they consider. My guess is that they try each at the halfway point between them, and at their original point, based on the precedent.

Legal Move Selection Strategy

So the first legal move is completely random, the first random edge with a legal move that provides a benefit. then they say, “If one of the transformations is accepted, we update the candidate set by adding all neighboring edges,” but they also said the candidate set “initially contains all edges.” They must mean either that they remove all neighboring edges, or that the candidate set starts off with a random edge to remove, and then crawls outward from there. The lead author, Hugues Hoppe, has a video showing the algorithmm in action, and it looks like the edges are updating in all different locations.

Fortunately, Hoppe also has a link to a Github repo on his website with implementations of his work. I found the relevant code in MeshFit.cpp

void do_stoc() {

perhaps_initialize();

HH_STIMER(_stoc);

assertx(!dihfac);

if (verb >= 2) showdf("\n");

if (verb >= 1) showdf("Beginning stoc, spring=%g, fliter=%g\n", spring, fliter);

fill(opstat.na, 0);

fill(opstat.ns, 0);

fill(opstat.nor, 0);

opstat.notswaps = 0;

int ni = 0, nbad = 0, lecol = 0, lespl = 0, leswa = 0;

for (Edge e : mesh.edges()) ecand.enter(e);

while (!ecand.empty()) {

ni++;

Edge e = ecand.remove_random(Random::G);

ASSERTX(mesh.valid(e));

EOperation op;

op = OP_ecol; // dummy_init(op)

EResult result = R_illegal;

float edrss;

dummy_init(edrss);

if (result != R_success) {

op = OP_ecol;

result = try_op(e, op, edrss);

}

// do not try edge_splits under zippysimplify

if (result != R_success && fliter) {

op = OP_espl;

result = try_op(e, op, edrss);

}

if (result != R_success) {

op = OP_eswa;

result = try_op(e, op, edrss);

}

...

The try_op calls go into try_ecol, try_espl, and try_eswa, each of which will remove affected edges, perform the operation, and add neighboring edges to the hash set ecand. It seems that the expectation is that a lot of moves will be invalid or show no benefit, so after they are removed from the list of candidates, they will need to be re-added at a later iteration when conditions might make their best move more useful.

See this portion of try_ecol:

for (Vertex v : mesh.vertices(e)) {

for (Edge ee : mesh.edges(v)) ecand.remove(ee);

}

remove_face(f1);

if (f2) remove_face(f2);

mesh.collapse_edge(e); // v1 kept

// add about 12 to 16 edges

for (Edge ee : mesh.edges(v1)) ecand.add(ee);

for (Face f : mesh.faces(v1)) ecand.add(mesh.opp_edge(v1, f));

I expect vertices to touch an average of about 4 edges, so we would expect to remove 8 candidate edges and add back 12 to 16 candidate edges. This seems to comport with the video. By the time you’ve simplified a given wall enough, you only need to look at the neighborhoods of other “hotspots” of beneficial moves not connected to that wall, so its edges will generally stay out of the candidate pool.

Setting the spring constant

We finish out section 4 with some specifics about the strategy previously described for the spring constant, pointing to figure 7(f,g,h). We can definitely see how the lower constant allows for much longer triangles, and that the effect is logarithmic.

I found it interesting that some of the faces stayed the same between the middle and final iterations, though they were near a regular pattern of backgammon spikes. I suppose that’s just a side effect of the random selection and convergence. With a more demanding c_rep parameter they might have been wiped out.

Results

I gloss over the surface reconstruction part, and the most interesting mesh simplification benchmarks were in the introduction. It’s worthwhile, though, to study the table to see how the algorithm scales. It took me a minute to see how the table works, but the horizontal lines separate each data set, and the entry with a “-” for time is the input to the process. Of course computers are a lot faster now than they were in 1993, so we’re only interested in the ratios.

The law of diminishing marginal returns is plain to see in 7c-7h, where we take twice as long to get from 270 to 163 (factor of 1.6:1) as we did to get from 1572 to 270 (factor of 5.8:1). I think this is because the proportion of legal moves that improve the energy function gets very small, as the authors alluded to earlier.

Segmentation

They consider faces connected if their dihedral angle is below a given threshold, and segment the mesh by taking each island of connected faces together, showing the result in 7(i). The sides of the cube in the middle are each separate segments, so the threshold must be 90 degrees or less. But my visualized reading of dihedral angles is that two faces on the same plane would have a dihedral angle of 180. In retrospect, i figure that a plane should have an angle of 0 with itself, so i was visualizing it wrong. Rather than drawing a circle such that an edge passes through its center, and measuring the arc between the points where it passes through two faces, I should be asking how much you would have to rotate one face to give it the same orientation as the other. That sheds more light on the dihedral angle criterion for edge moves in section 4, too.

They then show how the segmentation helps us to apply different shader variants and parameters to each surface. If you have a perfectly flat surface, you only need one normal vector to have it react to lighting in a rudimentary way, so you can avoid the extra calculations required for a curved surface. Figure 7(m) is the closest thing we get to a color coding of figure 7(i).

Parameter Setting and Performance Statistics

I already looked at the table, so all that’s left to say is that I wish they had tested more values of c_rep to see how things change, but at least we saw the difference between 1E-5 and 1E-4.

Related work

I gloss over this part as well, because I was mainly interested in studying the method, but this section should not be ignored completely because it provides cues for what else to study to build out my foundation in 3D computing. I have heard of “marching cubes” before, so I may want to put that Schroeder paper, Decimation of triangle meshes, on my list.

I see no need to summarize the summary.

Looking Back

In this section I will assess the reading approach I took today and look for lessons that can be applied generally to the pursuit of research.

One of the hardest skills I’ve had to learn in my technical career, is choosing what details to ignore and what to hone on. Attention dysregulation means that by default, priorities are indistinct and novelty reigns. There can be creative benefits to this, branching out and synthesizing many ideas (breadth), but it makes mainline productivity (depth) a struggle. Writing as I go is one of my techniques to magnetize my attention to a particular goal, and I believe this “Let’s read” got me somewhere that I wouldn’t be otherwise.

Something that occured to me today is that it’s useful to keep the intended audience in mind when reading academic papers. In this case, the audience is attendees of SIGGRAPH ’93, who have something of a collective rapport in the subject area. This influences what the authors choose to dwell on and what they rush through. Recall the sentence:

A simplicial complex K consists of a set of vertices {1, …, m}, together with a set of non-empty subsets of the vertices, called the simplices of K, such that any set consisting of exactly one vertex

is a simplex in K, and every non-empty subset of a simplex in K is

again a simplex in K (cf. Spanier [14]).

This is a 56-word labyrinth, which tells me the intent is not to be instructive or revalatory. It cites to a textbook and seems to act more like a required preamble, a from topology import complex, simplex, to satisfy the mathematical statements that live on top of it, but most of the audience has already seen it. This is a pattern I’ll look for in the future, where a sentence is meant not to be read like normal, but to be optionally unfurled and linked to another resource.

Finally, I’ve learned that an author’s website can be a valuable companion resource for clearing up ambiguities or apparent contradictions in the text. Source Code, especially, acts as paratext that nails down the exact mechanics of a technique.

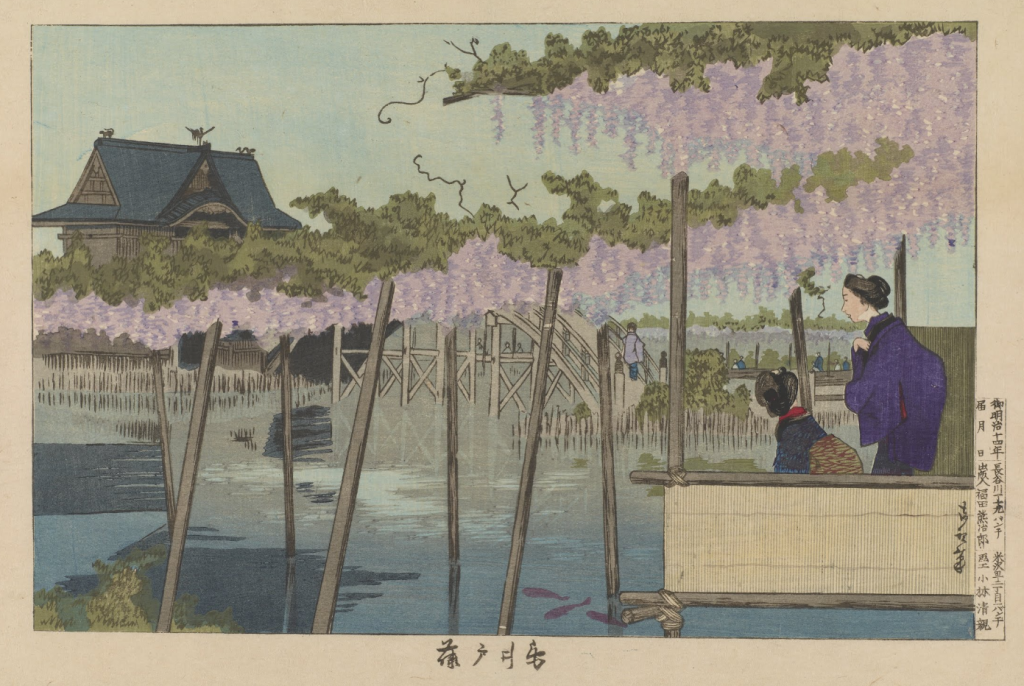

Cover art: Wisteria at Kameido, by Kobayashi Kiyochika 1881, via Google arts & culture